The Gymshark black Friday sale - A lesson in software resiliency and best practices

In the realm of e-commerce, Gymshark's Black Friday sale (weirdly, launched on a Thursday this year!) has become a noteworthy case study, shedding light on the intricacies of handling a high-traffic website during peak periods.

As we delve into the challenges faced during this event, it's important to recognise the commendable efforts of the Gymshark engineering team. In the world of software engineering, we've all found ourselves navigating the delicate balance between anticipation and chaos during crucial launches. Reflecting on this event offers valuable insights for us, with a nod to the shared experiences of those who grappled with unforeseen challenges at Gymshark.

In this article we will take an educated guess at the issues the Gymshark website might have encountered and explore practical solutions to them. These lessons contribute to a deeper understanding of how to build websites that can gracefully handle extremely high traffic surges, helping everyone build more resilient services and websites by default.

Problem #1: Essential services encountered a cascade of 500 errors, seemingly reliant on one another.

The initial snag in Gymshark's Black Friday event exposed a critical flaw — essential services tightly woven to the point of collective failure. Multiple unrelated API requests were returning error status codes. This usually means you have coupled your services together too tightly, perhaps running an entire API on one process, or using one un-optimised database for all of your data.

One potential solution to this lies in decoupling high-traffic services, granting them the ability to operate independently. This might mean separating certain functionalities of the website, such as product listings and basket management, into separate databases and deploying distinct services capable of scaling independently of each-other. By doing so, the failure of one service won't trigger a systemic collapse, ensuring a more robust and resilient architecture.

Solution: Decoupling services that aren't related to each-other

Challenge #2: Wishlist chaos, rate limiting, and a potential misalignment between marketing and engineering.

The Gymshark marketing team encouraged their users to add their items to their Wishlist ahead of the sale. If you are going to drive a lot of traffic to one part of your site and its APIs, you must make sure it can handle substantially more load than you expect to receive.

This debacle not only unveiled technical obstacles but also a misalignment between marketing and engineering teams. To avert such crises, it is imperative to scale and load test areas of your site which are intentionally attracting heavy traffic. Moreover, transparent communication between teams is vital, especially when marketing initiatives drive an influx of users to specific pages. Your engineering team should be aware of the comms that marketing are putting out, especially if they are deliberately pushing a high amount of traffic to one page.

Solution: Strengthen high traffic infrastructure and foster inter-team communication

Challenge #3: The UI became stuck in a 'loading state' when APIs failed to respond.

In the event that a user could load the webpages without an internal server error, multiple people were met with another issue - loading spinners that did not stop spinning. Under the hood, the API requests they relied on were failing unexpectedly and the client-side code did not effectively handle this.

A perpetually loading UI is more than a technical issue; it can be a direct blow to user experience. To mitigate this, we should always design client-side pages to anticipate API failures. Implement features that dynamically respond to errors, such as halting loading spinners, displaying clear error messages, and providing user-friendly options like manual reloads or automatic retries with an exponential backoff. This proactive UX approach ensures that users remain informed and in control of what is happening in their session, even in the face of unforeseen technical glitches.

Solution: Prioritise user experience from the start, baking it into your engineering values

Challenge #4: The translations API joined the breakdown party

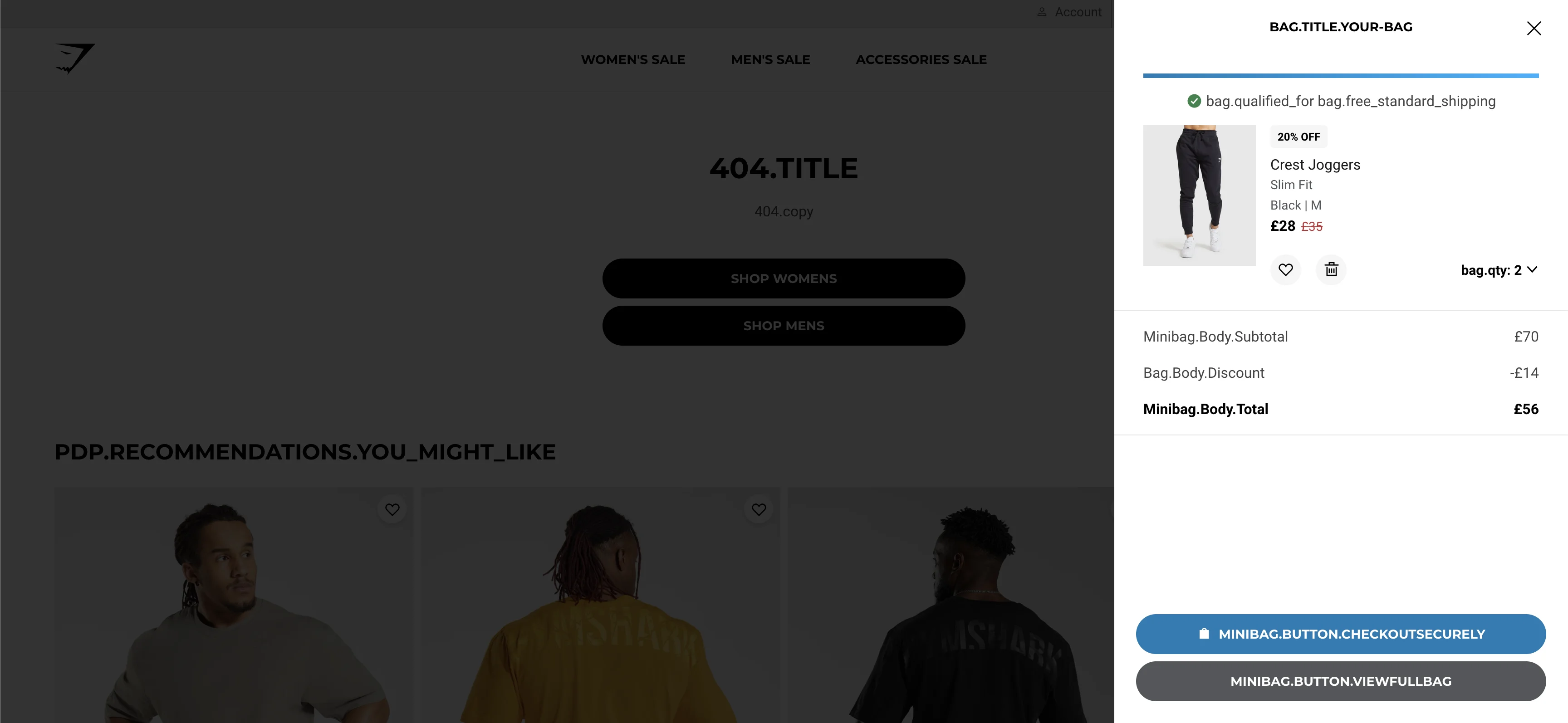

As mentioned previously, when multiple business critical APIs fall over simultaneously, its a bad time for everyone involved. When your translations API is also coupled to this misbehaving beast, you have another problem entirely. At the height of the issues that Gymshark were experiencing, their translations API calls also failed, presenting customers with screens that looked something like this:

This adds to the confusion that the user is experiencing, and also likely means that any nice generic error messages we would have liked to have shown about the downtime on site, would have been un-readable translation keys which are not much good to anyone.

To fix this, we can implement robust caching strategies and backup solutions, like storing translations in local storage and having a long lived cache on these requests. These measures ensure that, even in the midst of high traffic and API failures, critical functionalities remain accessible and information can be shown to users correctly.

Solution: Distribute APIs, implement caching, implement redundancy methods

Challenge #5: Gymshark's social media response was vague - “our website is lagging!”

A crucial aspect of crisis management is informative customer support. Gymshark's vague response left users in the dark, exacerbating frustration. Crafting responses that provide clear information about the situation, acknowledge high traffic volumes, and offer estimated recovery times can significantly improve user perception. Informed users are more likely to exercise patience and await a resolution.

A better response might have been: “Our website is experiencing a high amount of traffic at the moment, impacting some of our core services. Please watch this thread for updates and we will let you know as soon as you will be able to browse our products and make purchases again”.

Solution: Craft informative support responses which help take load off of the team and the affected services

Challenge #6: The absence of an 'oh shit' button.

In situations where everything seems to go awry, having a contingency plan is essential. An 'oh shit' button, introducing a queueing system for the website, could have significantly improved the user experience during Gymshark's Black Friday ordeal. By temporarily limiting the flow of traffic to essential services, this feature would have alleviated strain and allowed users who could access the website to actually make purchases while the services were scaled up in the background.

Solution: Introduce a panic button

Challenge #7: Proper load testing wasn't performed ahead of time.

To be clear, this is purely speculation based on our experience in the industry. More often than not, companies will either not load test their website, load test their websites with insufficient traffic or load test their website using site browsing patterns that are not akin to their user's.

Remember, loading the heavily cached homepage of your website 1000s of times concurrently without any issue is an achievement, however, a more helpful test in this scenario could be to load the homepage, log in, visit your Wishlist page and add a few items to the basket. This will simulate user behaviour much more accurately than just loading the home page multiple times, and will likely show up inefficiencies in your services and websites more effectively, allowing you to rectify them before the big day.

This approach allows developers to rectify issues before they manifest on a larger scale, emphasising the importance of strategic and behaviour-driven load testing in the preparation phase.

Solution: Test strategically

In conclusion, Gymshark's Black Friday sale serves as a great case study in navigating the intricacies of managing a high-traffic website during peak periods. We extend sincere recognition to the Gymshark engineering team for their commendable efforts during this challenging event. We hope that this case study will help businesses understand the real world technical implications associated with the go-live of high traffic product sales and other large launch events. It's also important to remember, that to have these kinds of problems, you have to have amassed a customer base that caused them. So we tip our hat to you, Gymshark, for your viral marketing and product quality is a modern day wonder.